In Python programming, where efficiency and speed matter, threading is a powerful technique for speeding up applications, especially ones that spend much of their time waiting on files, networks, or users. Imagine having several hands working at once, so your program can multitask. This blog explores threading in Python, along with processes, concurrency, and synchronization, and demystifies their concepts and benefits.

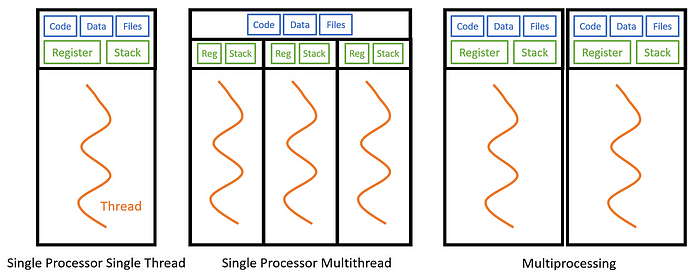

1. What are processes and threads?

Process: Imagine you’re making a yummy pizza. In computer terms, a process is like making that pizza from start to finish: you gather ingredients, prepare the dough, add toppings, and bake it. Each pizza you make is an individual process. Processes are like separate cooking projects in the same kitchen, each with its own counter space and ingredients, just as each process has its own memory.

Thread: Now, think of making a drawing. When you’re drawing a picture, you might use crayons of different colors. Threads are like using different colored crayons to work on parts of the same drawing at the same time. If your friend is coloring the sky blue while you color the grass green, you’re both working on the same sheet of paper as two threads, just as threads in one process share the same memory.

Process Example:

```python
import time
import multiprocessing

# This function represents a cooking process
def cook_dish(dish_name):
    print(f"Starting to cook {dish_name}")
    time.sleep(3)  # Pretend cooking takes 3 seconds
    print(f"{dish_name} is ready!")

if __name__ == "__main__":
    dishes = ["Pizza", "Burger", "Pasta"]

    # Create processes for cooking different dishes
    processes = []
    for dish in dishes:
        process = multiprocessing.Process(target=cook_dish, args=(dish,))
        processes.append(process)
        process.start()

    # Wait for all processes to finish
    for process in processes:
        process.join()

    print("All dishes are cooked!")
```

Thread Example:

```python
import time
import threading

# This function represents a drawing thread
def draw_picture(color):
    print(f"Using {color} crayon to draw...")
    time.sleep(2)  # Pretend drawing takes 2 seconds
    print(f"Drawing with {color} is done!")

if __name__ == "__main__":
    colors = ["red", "blue", "green"]

    # Create threads for drawing with different colors
    threads = []
    for color in colors:
        thread = threading.Thread(target=draw_picture, args=(color,))
        threads.append(thread)
        thread.start()

    # Wait for all threads to finish
    for thread in threads:
        thread.join()

    print("Drawing is complete!")
```

In the process example, different dishes (like Pizza, Burger, Pasta) are cooked as separate processes. In the thread example, different colors (like red, blue, green) are used to draw parts of a picture as separate threads.

So, processes are like cooking different dishes in the kitchen, and threads are like drawing different parts of a picture with different colors. Both let a program work on several tasks at once, which saves time when tasks spend much of their time waiting or when the work can be spread across multiple CPU cores.

2. What is multithreading?

Imagine you have a big plate of cookies that need to be decorated with different toppings — chocolate chips, sprinkles, and icing. You want to get this done quickly, so you decide to call your friends to help. Each friend can work on a different cookie at the same time, making the decorating process faster.

In Python, multithreading is like having friends who help you with different tasks at the same time. Instead of doing one thing after another, you can do several things together to save time.

```python
import threading
import time

# This function decorates cookies with toppings
def decorate_cookie(cookie, topping):
    print(f"Decorating {cookie} with {topping}")
    time.sleep(2)  # Pretend decorating takes 2 seconds
    print(f"{cookie} is ready!")

# List of cookies to decorate
cookies = ["Cookie A", "Cookie B", "Cookie C"]

# List of toppings for each friend
toppings = ["chocolate chips", "sprinkles", "icing"]

# Create threads for each friend to decorate cookies
threads = []
for cookie, topping in zip(cookies, toppings):
    thread = threading.Thread(target=decorate_cookie, args=(cookie, topping))
    threads.append(thread)
    thread.start()

# Wait for all threads (friends) to finish decorating
for thread in threads:
    thread.join()

print("All cookies are ready to enjoy!")
```

3. What is multiprocessing?

Imagine you have a big pile of homework assignments that need to be completed. You want to finish them quickly, so you decide to ask your friends to help. Each friend can work on a different assignment at the same time, making the whole process faster.

In programming, multiprocessing is similar to having friends who help you with different tasks, just like in the homework example. Instead of doing one thing after another, you can have multiple parts of your program working on different tasks at the same time, which can make your program run faster on computers with multiple processors or cores.

Here’s a basic explanation of multiprocessing with Python:

- Multiprocessing: In programming, multiprocessing is a technique where different parts of your program, called processes, run simultaneously to complete tasks more quickly.

Python has a module called multiprocessing that helps you achieve this. It allows you to create multiple processes that can work independently. Each process can perform its own set of tasks, which can speed up the execution of your program, especially on computers with multiple processors or cores.

For example, if your program performs heavy calculations, you can split them across different processes and run them in parallel on separate cores. For CPU-bound work like this, doing so can significantly improve performance.

Here’s a simple example using the multiprocessing module in Python:

```python
import multiprocessing

# This function calculates the square of a number
def calculate_square(number):
    result = number * number
    print(f"The square of {number} is {result}")

if __name__ == "__main__":
    numbers = [1, 2, 3, 4, 5]

    # Create processes to calculate squares
    processes = []
    for num in numbers:
        process = multiprocessing.Process(target=calculate_square, args=(num,))
        processes.append(process)
        process.start()

    # Wait for all processes to finish
    for process in processes:
        process.join()

    print("All calculations are done!")
```

In this example, the program creates a separate process to calculate the square of each number. Each process works independently, so the calculations can run at the same time, and the order of the printed lines can vary from run to run. For work this tiny, the cost of starting a process outweighs any gain; multiprocessing pays off when each task does substantial CPU work.

In summary, multiprocessing in Python allows you to break down your program’s tasks into separate processes that can run at the same time, improving performance and efficiency on computers with multiple processors or cores.

4. What is concurrency, and how do you handle it in Python?

Concurrency is a concept in programming that involves managing multiple tasks that can execute independently but potentially overlap in time. It’s like juggling multiple balls — you’re keeping them all in the air, but not necessarily focusing on just one ball at a time.

In programming, concurrency is about structuring your code so that several tasks can make progress during the same period, for example, by switching to another task while one is waiting. On multi-core processors, concurrent tasks can also run in parallel, but they don’t have to.

Python offers several ways to handle concurrency:

- Threading: Threading allows you to run multiple threads (smaller units of a program) within a single process. Threads share the same memory space and can be useful for I/O-bound tasks, like working with files, network communication, or waiting for user input.

- Multiprocessing: Multiprocessing enables you to run multiple independent processes, each with its own memory space. Processes are useful for CPU-bound tasks, like heavy computations, as they can utilize multiple processor cores.

- Asyncio (Asynchronous Programming): Asynchronous programming is a modern approach where a single thread can handle multiple tasks efficiently by switching between them when one is waiting (e.g., for I/O operations). It’s great for I/O-bound tasks and can be used with the `asyncio` library.

- Thread Pools and Process Pools: Libraries like `concurrent.futures` offer thread pools and process pools, which manage groups of threads or processes. You submit tasks to these pools, and they schedule the tasks on their workers and collect the results for you.

- Synchronization Mechanisms: When dealing with concurrency, you need to ensure that threads or processes don’t interfere with each other. Synchronization mechanisms like locks (mutexes) and semaphores help manage access to shared resources to prevent race conditions and maintain data integrity.

- GIL (Global Interpreter Lock): In the standard CPython interpreter, the Global Interpreter Lock allows only one thread to execute Python bytecode at a time in a single process. This means that for CPU-bound tasks, multiple threads can’t run Python code in parallel, so adding threads won’t speed them up. I/O-bound tasks are affected much less, because a thread releases the GIL while it waits for I/O.
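Since the list mentions `asyncio` without showing it, here is a minimal sketch, assuming an I/O-bound workload simulated with `asyncio.sleep()`. The `fetch` coroutine, its names, and its delays are hypothetical stand-ins for real network calls. The three waits overlap on a single thread, so the total time is close to one delay rather than three.

```python
import asyncio
import time

# Hypothetical I/O-bound task: asyncio.sleep() stands in for a network call
async def fetch(name, delay):
    print(f"Start {name}")
    await asyncio.sleep(delay)  # yields control to the event loop while "waiting"
    print(f"Done {name}")
    return f"{name} result"

async def main():
    # gather() runs the coroutines concurrently on one thread and
    # returns their results in the order they were passed in
    return await asyncio.gather(
        fetch("A", 0.3),
        fetch("B", 0.3),
        fetch("C", 0.3),
    )

start = time.perf_counter()
results = asyncio.run(main())
elapsed = time.perf_counter() - start

print(results)  # ['A result', 'B result', 'C result']
print(f"Took about {elapsed:.1f} s")
```

The key design point is `await`: a coroutine gives up control only where it awaits, so a long CPU-bound loop inside a coroutine would block every other task on that event loop.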

To choose the right approach for handling concurrency in Python, consider the nature of your tasks (I/O-bound or CPU-bound), the complexity of synchronization required, and the specific libraries you’re using. For I/O-bound tasks, asyncio and threading can be effective, while for CPU-bound tasks, multiprocessing and process pools are often better choices.

Remember, concurrency is about managing tasks that can overlap in time efficiently, and Python provides a variety of tools to help you achieve this in your programs.

5. What’s the difference between concurrency and parallelism, and how do you achieve them in Python?

Concurrency and parallelism are related concepts in the world of programming, but they have distinct meanings:

- Concurrency: Concurrency refers to the ability of a system to manage and execute multiple tasks in overlapping time periods, even if those tasks are not physically executing at the exact same time. It’s like juggling multiple tasks, switching between them so that each task gets a turn.

- Parallelism: Parallelism, on the other hand, involves executing multiple tasks or processes simultaneously, utilizing multiple processing units or cores of a CPU. In parallelism, tasks truly run at the same instant, each on its own core.

In simpler terms, concurrency is about managing tasks efficiently in an interleaved manner, while parallelism involves executing tasks truly at the same time.

Achieving Concurrency and Parallelism in Python:

- Concurrency in Python: Concurrency can be achieved using threading or asynchronous programming with the `asyncio` module.
  - `threading`: Python's built-in `threading` module allows you to create and manage threads, which can run concurrently. Threads are suitable for I/O-bound tasks that involve waiting, such as network requests or reading files.
  - `asyncio`: The `asyncio` module enables asynchronous programming, allowing you to write non-blocking code using coroutines. It's great for I/O-bound tasks and can provide concurrency without the overhead of multiple threads.

- Parallelism in Python:
  - Achieving true parallelism often involves using the `multiprocessing` module, as it enables separate processes to run in parallel. Each process runs in its own memory space and can utilize multiple CPU cores.
  - The GIL (Global Interpreter Lock) in CPython restricts true parallelism in multi-threaded programs, especially for CPU-bound tasks. Therefore, if you need parallelism for CPU-bound tasks, `multiprocessing` is recommended.

It’s important to note that while concurrency can be achieved with both threading and asynchronous programming, running pure-Python CPU-bound code in parallel in standard CPython generally requires multiple processes, via the `multiprocessing` module or `concurrent.futures.ProcessPoolExecutor`. The choice between concurrency and parallelism depends on the nature of your tasks, the hardware you're using, and the specific requirements of your application.
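The difference shows up in timings. Below is a minimal sketch of concurrency with threads, assuming an I/O-bound job simulated by `time.sleep()`. The `download` function and the file names are hypothetical. Four waits of 0.3 s each overlap instead of adding up.

```python
import threading
import time

# Hypothetical I/O-bound task: time.sleep() stands in for waiting on a network or disk
def download(name, results):
    time.sleep(0.3)
    results[name] = "done"  # each thread writes its own key

results = {}
names = ["file1", "file2", "file3", "file4"]

start = time.perf_counter()
threads = [threading.Thread(target=download, args=(n, results)) for n in names]
for t in threads:
    t.start()
for t in threads:
    t.join()
elapsed = time.perf_counter() - start

# Run one after another, this would take about 4 x 0.3 = 1.2 s;
# because the waits overlap, it takes roughly 0.3 s.
print(f"{len(results)} downloads in {elapsed:.2f} s")
```

This works despite the GIL because a blocking call like `time.sleep()` releases it while waiting. If each thread ran a CPU-bound pure-Python loop instead, standard CPython would not finish any faster than running the loops one after another.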

6. What are semaphores and mutexes?

Imagine you and your friends are drawing on a big piece of paper together. Sometimes, you all want to use the same set of crayons, but there’s only one set available. To make sure everyone gets a chance to use the crayons without messing up each other’s drawings, you use a “talking stick.” Only the friend holding the talking stick can use the crayons, and when they’re done, they pass the stick to the next friend.

In computer programs, a semaphore is like that talking stick. It helps different parts of a program take turns using shared resources, like a set of crayons. When one part is using the resource, it holds the semaphore, and others have to wait until it’s done. If there were three sets of crayons, the semaphore could hand out three sticks, so up to three friends could draw at once.

Now, imagine you and your friend want to play with a toy together. But there’s a rule: only one of you can hold the toy at a time. To make sure you don’t both grab the toy at once and fight over it, you use a “special key.” When one friend wants to play with the toy, they take the key, use the toy, and when they’re done, they put the key back so the other friend can use it.

In computer programs, a mutex (short for “mutual exclusion”) is like that special key. It helps control access to shared resources, so only one part of the program can use the resource at a time. It’s a way to prevent things from getting messy when multiple parts of the program want to use the same thing.

So, both a semaphore and a mutex are like tools that help friends (or parts of a program) take turns and share things without causing problems or fights. They keep everything organized and fair!

7. Are mutexes and semaphores related to threading in Python?

Mutex and Semaphore are synchronization mechanisms used in multithreaded programs to control access to shared resources and ensure that multiple threads don’t interfere with each other when they’re working with the same data.

- Mutex: As I explained earlier, a mutex is like a special key that ensures only one thread can access a shared resource at a time. It’s a way to prevent conflicts and data corruption. In Python, you can use `threading.Lock()` to create a mutex lock that threads can use to control access to shared data.

- Semaphore: A semaphore is similar to a mutex but can allow a certain number of threads to access a shared resource simultaneously. It’s like having multiple talking sticks, so a limited number of threads can access the resource together. Semaphores are helpful when you want to limit concurrency, such as allowing only a specific number of threads to use a resource that might get overwhelmed if too many access it at once.

In Python, you can use the threading.Semaphore() class to create semaphores that manage access to shared resources among threads.
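To see how the two differ in practice, here is a minimal sketch that measures how many threads are ever inside a guarded block at the same moment. The `peak_concurrency` helper, the worker count, and the 0.05 s "work" time are hypothetical choices for illustration. A `Lock` admits one thread at a time, while a `Semaphore(3)` admits up to three.

```python
import threading
import time

def peak_concurrency(guard, workers=6):
    """Run `workers` threads that each hold `guard` briefly; return the most
    threads ever inside the guarded block at the same moment."""
    active = 0
    peak = 0
    counter_lock = threading.Lock()  # protects `active` and `peak` themselves

    def worker():
        nonlocal active, peak
        with guard:  # Lock and Semaphore both support the with statement
            with counter_lock:
                active += 1
                peak = max(peak, active)
            time.sleep(0.05)  # simulate using the shared resource
            with counter_lock:
                active -= 1

    threads = [threading.Thread(target=worker) for _ in range(workers)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return peak

print("Lock peak:", peak_concurrency(threading.Lock()))               # always 1
print("Semaphore(3) peak:", peak_concurrency(threading.Semaphore(3)))  # at most 3
```

Note the extra `counter_lock`. Even the bookkeeping variables are shared between threads, so they need their own protection.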

Both mutexes and semaphores are crucial tools when working with threads to ensure data integrity, prevent race conditions, and control how threads interact with each other. They help make sure that your threaded programs run smoothly and produce the correct results, just like your friends taking turns with the talking stick and special key in the examples I mentioned earlier.

8. What’s the difference between a semaphore and a mutex, and when should you use each?

Let’s dive into the differences between semaphore and mutex with a clear example.

Semaphore:

- A semaphore allows a certain number of threads to access a shared resource simultaneously.

- It’s like having multiple keys to a room, and a limited number of people can enter the room at the same time.

- Semaphores are used when you want to control parallelism and limit the number of threads that can access a resource.

Mutex:

- A mutex (short for “mutual exclusion”) allows only one thread to access a shared resource at a time.

- It’s like having a special key that only one person can have at a time to unlock a room.

- Mutexes are used when you want to ensure that only one thread can modify a resource to prevent data corruption and conflicts.

Let’s look at an example to understand the difference:

Imagine a playground with a slide and a swing. There are three children who want to play on the slide, and two children who want to swing. But you don’t want too many children on the slide or the swing, or there might be chaos!

Here’s how you could use a semaphore and a mutex to control this situation:

Semaphore Example:

```python
import threading
import time

# Create a semaphore with 2 "slots" for the swing
swing_semaphore = threading.Semaphore(2)

# Function for swinging
def swing(child):
    with swing_semaphore:  # Acquire a slot; it is released automatically
        print(f"{child} is swinging!")
        time.sleep(1)  # Simulate swinging
        print(f"{child} is done swinging!")

# Create a mutex for the slide
slide_mutex = threading.Lock()

# Function for sliding
def slide(child):
    with slide_mutex:  # Only one child can hold the lock at a time
        print(f"{child} is sliding!")
        time.sleep(1)  # Simulate sliding
        print(f"{child} is done sliding!")

threads = []

# Create threads for children on the swing
for i in range(2):
    threads.append(threading.Thread(target=swing, args=(f"Child {i+1}",)))

# Create threads for children on the slide
for i in range(3):
    threads.append(threading.Thread(target=slide, args=(f"Child {i+3}",)))

# Start everyone, then wait until all children are done
for thread in threads:
    thread.start()
for thread in threads:
    thread.join()
```

In this example, the semaphore ensures that only two children can swing at the same time, while the mutex ensures that only one child can slide at a time. This helps control the number of children on the swing and slide simultaneously.

So, in summary:

- Use a semaphore when you want to control how many threads can access a resource simultaneously, like limiting the number of children on the swing.

- Use a mutex when you want to ensure that only one thread can access a resource at a time, like allowing only one child on the slide.

Both tools are essential for managing shared resources in threaded programs, and the choice depends on the specific requirements of your program.

9. What is a race condition, and how do you prevent it?

A race condition is a common issue that can occur in multithreaded or parallel programs when two or more threads access shared resources (like variables, data structures, or files) concurrently and at least one of them modifies the resource. The result of a race condition is unpredictable behavior and incorrect output because the final outcome depends on the timing and order of thread execution.

Imagine you and your friend are drawing on the same piece of paper at the same time. If you both try to draw different things in the same spot at once, the result might not make sense because your drawings overlap.

To prevent race conditions, you need to make sure that shared resources are accessed in a controlled and coordinated manner. Here are some strategies:

- Use Locks (Mutexes): A mutex (short for “mutual exclusion”) is like having a key to access a resource. Only one thread can have the key at a time, which prevents multiple threads from modifying the resource simultaneously. Lock the resource before accessing it and unlock it when you’re done.

- Use Semaphores: As explained earlier, semaphores can control the number of threads that can access a resource simultaneously. This helps prevent too many threads from interacting with the resource at once.

- Use Critical Sections: A critical section is a part of your code where a shared resource is being accessed. Protect this section using locks or other synchronization mechanisms to ensure only one thread can execute it at a time.

- Use Thread-Safe Data Structures: Some programming languages provide thread-safe data structures that handle synchronization internally, reducing the chances of race conditions. For example, Python’s `queue.Queue` is thread-safe for adding and removing items.

- Use Atomic Operations: Some operations are atomic, meaning other threads can’t interrupt them halfway. For example, in CPython, assigning a single value to a variable is atomic, but `counter += 1` is not, because it reads, adds, and writes back in separate steps.

- Minimize Shared Data: If possible, design your program in a way that threads don’t need to access shared resources too often. Reducing shared data can reduce the likelihood of race conditions.
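The lock strategy from the list can be sketched like this, assuming a shared integer counter updated by four threads. The `add_many` function and the iteration counts are hypothetical. Without the lock, some increments can be lost, and how many depends on timing and Python version. With the lock, the result is always the same.

```python
import threading

counter = 0
counter_lock = threading.Lock()

def add_many(times):
    global counter
    for _ in range(times):
        # `counter += 1` is read, add, write: another thread can run in between.
        # Holding the lock makes that whole step a critical section.
        with counter_lock:
            counter += 1

threads = [threading.Thread(target=add_many, args=(100_000,)) for _ in range(4)]
for t in threads:
    t.start()
for t in threads:
    t.join()

print(counter)  # 400000 with the lock
```

Taking the lock on every increment is simple but not free. Another common design is to have each thread count locally and add its total to the shared counter once, under the lock, at the end.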

Remember, preventing race conditions is about making sure that threads don’t interfere with each other’s work when accessing shared resources. By using proper synchronization mechanisms, you can ensure that your multithreaded program behaves predictably and produces correct results.
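The thread-safe data structure approach can be sketched with `queue.Queue` as a producer/consumer pipeline. The `worker` function, the three workers, and the `None` "stop" sentinel are assumptions chosen for illustration, not the only way to shut workers down. The queue does its own locking, so workers never touch each other’s items.

```python
import queue
import threading

tasks = queue.Queue()  # thread-safe FIFO; put() and get() do their own locking
results = []
results_lock = threading.Lock()

def worker():
    while True:
        item = tasks.get()
        if item is None:          # sentinel: no more work for this worker
            tasks.task_done()
            break
        with results_lock:        # protect the shared list explicitly
            results.append(item * item)
        tasks.task_done()

workers = [threading.Thread(target=worker) for _ in range(3)]
for w in workers:
    w.start()

for n in range(10):
    tasks.put(n)
for _ in workers:
    tasks.put(None)  # one sentinel per worker

tasks.join()  # blocks until every item has been marked task_done()
for w in workers:
    w.join()

print(sorted(results))  # [0, 1, 4, 9, 16, 25, 36, 49, 64, 81]
```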

10. What is a thread pool?

Imagine you have a group of friends who are really good at building sandcastles. You want to build a big sandcastle village, and you know that it would be faster if all your friends help out. Instead of calling them one by one whenever you need help, you create a special area at the beach where your friends are always ready to build sandcastles whenever you give them sand and a plan.

In programming, a thread pool is like that group of friends who are always ready to help with tasks. Instead of creating and starting threads each time you want to do something concurrently, you have a “pool” of pre-created threads ready to take on tasks when needed. This can save time and resources compared to starting threads from scratch every time.

Here’s a simple example using Python’s concurrent.futures.ThreadPoolExecutor:

```python
import concurrent.futures
import time

# This function represents a task that needs to be done
def do_task(task_name):
    print(f"Starting task: {task_name}")
    time.sleep(2)  # Pretend the task takes 2 seconds
    print(f"{task_name} is done!")

if __name__ == "__main__":
    tasks = ["Task A", "Task B", "Task C"]

    # Create a thread pool with 2 threads
    with concurrent.futures.ThreadPoolExecutor(max_workers=2) as executor:
        # Submit tasks to the thread pool
        future_to_task = {executor.submit(do_task, task): task for task in tasks}

        # Wait for all tasks to complete, in whatever order they finish
        for future in concurrent.futures.as_completed(future_to_task):
            task = future_to_task[future]
            future.result()  # Re-raises any exception the task raised
            print(f"{task} has completed.")
```

In this example, the ThreadPoolExecutor creates a pool with at most 2 threads (just like having 2 friends ready to build sandcastles). The tasks (Task A, Task B, and Task C) are then submitted to the thread pool, which assigns each task to an available thread. Task C waits until one of the first two tasks finishes and frees up a thread.

Using a thread pool can be more efficient than starting individual threads for each task, especially when you have a bunch of tasks to do. It’s like having a team of friends ready to help you build sandcastles whenever you need, making things quicker and more organized!

So, a thread pool is a way to manage and reuse a group of threads to handle tasks efficiently in a concurrent program.
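When tasks return values, `executor.map()` is often simpler than `submit()` plus `as_completed()`. Here is a minimal sketch; the `make_sandcastle` function and the `plans` list are hypothetical. With 2 workers and 4 tasks, the pool runs them in about two rounds and returns results in input order, not completion order.

```python
import concurrent.futures
import time

def make_sandcastle(plan):
    # Hypothetical task: pretend building takes a moment, then report back
    time.sleep(0.2)
    return f"{plan} castle"

plans = ["tower", "moat", "wall", "gate"]

with concurrent.futures.ThreadPoolExecutor(max_workers=2) as executor:
    # map() hands each plan to whichever pool thread is free and
    # yields results in the same order as `plans`
    castles = list(executor.map(make_sandcastle, plans))

print(castles)  # ['tower castle', 'moat castle', 'wall castle', 'gate castle']
```

If a task raises an exception, `map()` re-raises it when that task’s result is reached while iterating, so errors are not silently lost.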

Conclusion

In the world of programming, whether you’re building games, solving complex problems, or managing resources, understanding threads, processes, and synchronization mechanisms is crucial. Threads and processes are like your buddies who help you get things done efficiently, whether it’s building sandcastles on a digital beach, cooking up delicious dishes, or coloring a masterpiece. However, just like friends working together, you need rules and tools to avoid chaos. That’s where synchronization techniques like mutexes and semaphores come in — they’re like your talking stick and special key, ensuring that everyone takes turns and plays nice with shared resources.

As you dive into the world of multithreading, multiprocessing, and synchronization, you’re equipping yourself with the ability to harness the power of modern computers to solve problems faster and smarter. Whether you’re creating games, optimizing software, or exploring new frontiers, these concepts will be your trusty companions, guiding you through the exciting journey of programming adventures!

Did you enjoy this article? If so, get more similar content by subscribing to my YouTube channel, Coding With Krp Ajay! Thanks for reading. Happy coding.